30/09/2022

Along-channel component of the flow velocity around a cylinder (Re = 100), computed with a Physics Informed Neural Network and with a classical numerical model.

One of the most appealing advances in machine learning over the past five years is the development of physics informed neural networks (PINNs). In essence, these efforts have produced methods that enforce governing physical and chemical laws during the training of neural networks. The approach offers many advantages, especially for inverse problems or when the observational data providing boundary conditions are noisy or gappy.

What follows is a short primer on the prevailing methods, applications and potentially useful frameworks. The references on which it is based are provided at the end for further reading.

Modelling and forecasting across a broad range of fields, such as civil engineering, earth sciences, epidemic modelling (COVID-19), multibody physics and electrical engineering, for the lion's share boil down to formulating the problem in terms of partial differential equations (PDEs) and subsequently solving these PDEs by means of analytical or numerical (discretization) techniques.

Despite continuous progress in methods, computational power and (commercially) available software tools, numerically solving PDEs still has limits in its applicability to real-life phenomena. These limitations mainly result from (i) prohibitive computational costs, (ii) the complexity of mesh generation, (iii) difficulties in accounting for incomplete boundary conditions, and (iv) inverse modelling with hidden physics.

Owing to the giant leaps made by data-driven models (i.e. deep learning architectures), machine learning has progressed to a level where it can be considered a promising alternative. Yet machine learning models may falter when applied outside the range of the data used to train them, leading to physically inconsistent or implausible predictions. Moreover, the most inspiring advances in deep learning came from exploiting an abundance of data, which is not always available.

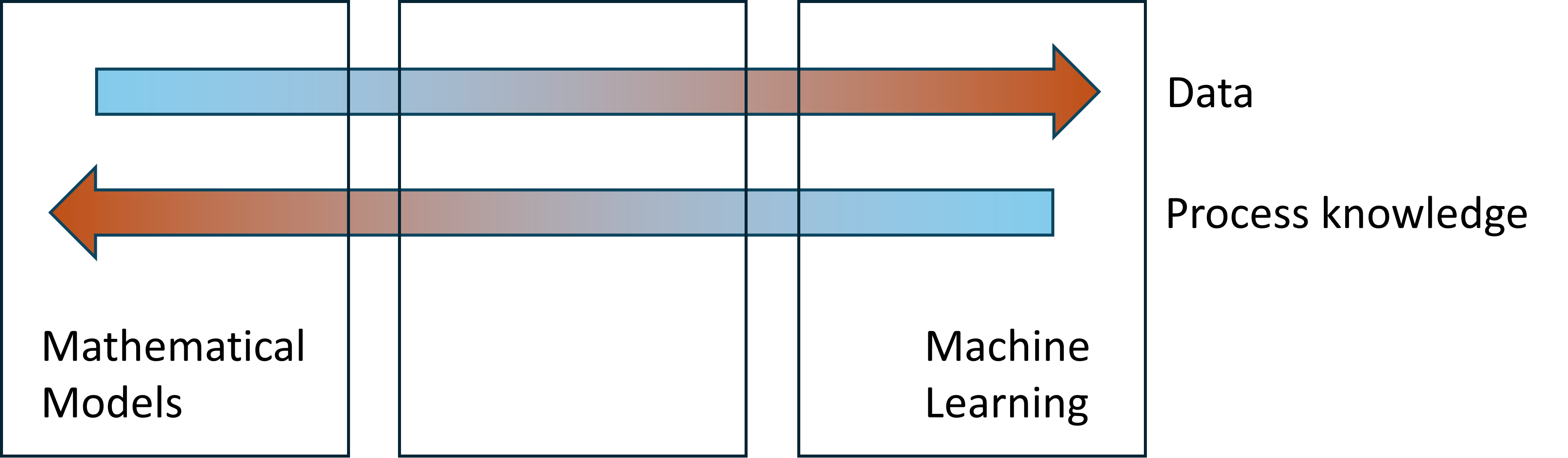

Schematic illustration of three categories into which a problem can fall, ranging from (left) problems where observations are scarce but the governing laws are well defined to (right) problems with an abundance of data and little knowledge of the physics.

Altogether, the missing piece of the puzzle consists of methods that integrate domain knowledge and information on the fundamental governing laws into the training of machine learning models, i.e. physics informed learning.

One approach to physics informed learning is to design the neural network architecture specifically to embed any a priori knowledge. For instance, Beucler et al. (2021) embedded the conservation of mass and energy in the architecture of a deep neural network applied to convective processes in climate modelling.

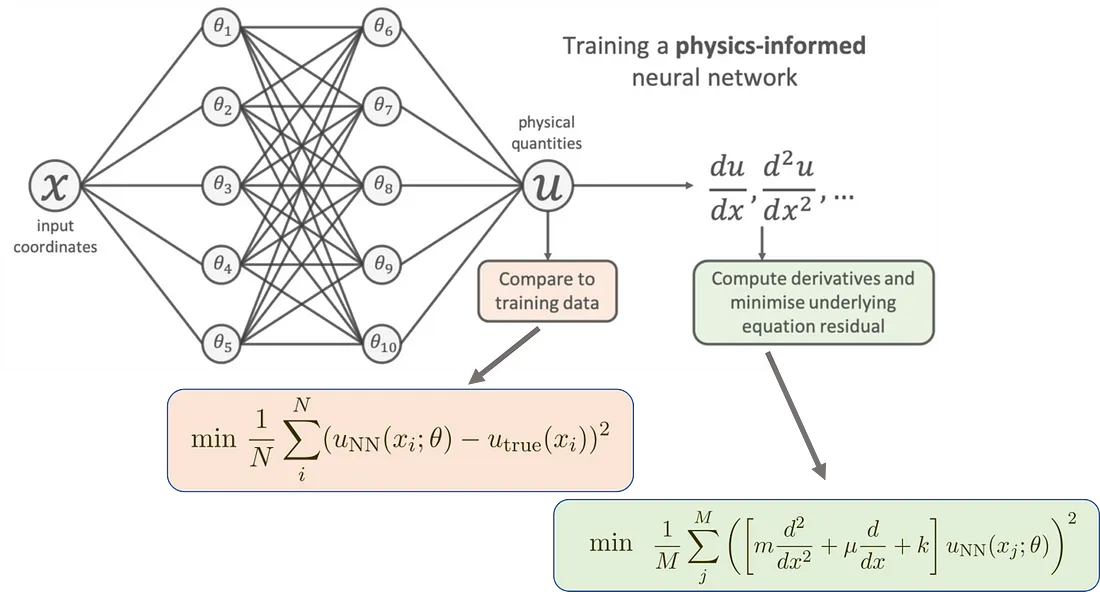

Physics Informed Neural Networks (PINNs) provide a second methodology to enable physics informed learning. In this approach, information on the governing laws is embedded in a neural network by adding a loss term related to the differential equation under consideration. The advantage of this approach is that it requires a less elaborate implementation.

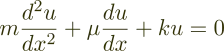

To illustrate the concept, we consider the problem of a damped harmonic oscillator. Mathematically, this problem is governed by the following differential equation:

Differential equation governing the dynamics of a damped harmonic oscillator, where m and k are the mass and spring constant, respectively. The Greek symbol μ describes the friction coefficient.
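Written out with the symbols named in the caption, and assuming that u denotes the displacement and x the independent variable (as in the text that follows), the standard form of this equation reads:

```latex
m \frac{d^2 u}{dx^2} + \mu \frac{du}{dx} + k\,u = 0
```

The DeepXDE example later in this post corresponds to the choice m = 1, μ = 4 and k = 400.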

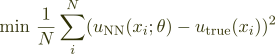

Approaching the problem as a machine learning engineer, if we have observations of u at distinct points x, we could train a machine learning model (a neural network) to predict these points (and hopefully the underlying dynamics). This is done by training the model to minimize the mean squared error between the network output and the training observations.
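The mean squared error objective described above can be written as follows. The notation is introduced here for illustration only: N observations (x_i, u_i), network parameters θ, and the network output written as û_θ(x).

```latex
\mathcal{L}_{\text{data}}(\theta) = \frac{1}{N} \sum_{i=1}^{N} \left( \hat{u}_\theta(x_i) - u_i \right)^2
```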


For a PINN, this loss is extended with a term that evaluates the differential equation itself, so the points considered are sampled both at the initial or boundary locations and throughout the domain of interest.

Schematic illustration of the concept of Physics Informed Neural Networks. The loss function combines a supervised loss on data measurements of u from the initial and boundary conditions with a loss computed by evaluating the differential equation at a set of training locations {x} sampled within the region under consideration.
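The two-part loss described in the caption above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not how a PINN library does it: derivatives are approximated with central finite differences instead of automatic differentiation, the "network" is replaced by plain Python functions, and the names `pinn_loss`, `physics_residual`, `weight` and the sampling choices are hypothetical. The coefficients m = 1, μ = 4, k = 400 are those of the DeepXDE example in this post.

```python
import numpy as np

# Oscillator coefficients matching the DeepXDE example in this post
M, MU, K = 1.0, 4.0, 400.0


def exact_solution(x):
    """Closed-form underdamped solution with u(0) = 1 and du/dx(0) = 0."""
    delta = MU / (2 * M)
    omega = np.sqrt(K / M - delta**2)
    return np.exp(-delta * x) * (np.cos(omega * x) + delta / omega * np.sin(omega * x))


def undamped(x):
    """Candidate with roughly the right frequency that ignores the damping."""
    return np.cos(20.0 * x)


def physics_residual(u_fn, x, h=1e-4):
    """ODE residual m u'' + mu u' + k u, with derivatives by central differences.

    Assumption: finite differences stand in for the automatic differentiation
    a PINN library would use, only to keep this sketch dependency-free.
    """
    du = (u_fn(x + h) - u_fn(x - h)) / (2 * h)
    d2u = (u_fn(x + h) - 2 * u_fn(x) + u_fn(x - h)) / h**2
    return M * d2u + MU * du + K * u_fn(x)


def pinn_loss(u_fn, x_data, u_data, x_colloc, weight=1.0):
    """Supervised data loss plus weighted mean squared ODE residual."""
    loss_data = np.mean((u_fn(x_data) - u_data) ** 2)
    loss_phys = np.mean(physics_residual(u_fn, x_colloc) ** 2)
    return loss_data + weight * loss_phys, loss_data, loss_phys


# Hypothetical sampling: a few observations early on, collocation points everywhere
x_data = np.linspace(0.0, 0.4, 10)
u_data = exact_solution(x_data)
x_colloc = np.linspace(0.0, 1.25, 200)

total_exact, data_exact, phys_exact = pinn_loss(exact_solution, x_data, u_data, x_colloc)
total_bad, data_bad, phys_bad = pinn_loss(undamped, x_data, u_data, x_colloc)
```

Evaluated this way, the closed-form solution gives a physics term close to zero, whereas the undamped candidate is penalized heavily by the physics term across the whole domain, including where no observations exist.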

Physics Informed Neural Networks are an active domain of research, and consolidation around a go-to framework appears to be still ongoing. Possible frameworks for Python include NeuroDiffEq, PyDEns and IDRLnet.

Hereafter, we present results obtained with DeepXDE, which appears to be a mature library and supports various automatic differentiation backends (e.g. PyTorch, TensorFlow, JAX).

In DeepXDE, the damped harmonic oscillator problem can be set up as follows:

```python
import deepxde as dde
import numpy as np

# Define the geometry (time domain) first: the conditions below depend on it
geom = dde.geometry.TimeDomain(0, 1.25)


# ODE residual: y'' + 4 y' + 400 y = 0
def ode_oscillator(t, y):
    dy_dt = dde.grad.jacobian(y, t)
    d2y_dt2 = dde.grad.hessian(y, t)
    return d2y_dt2 + 4 * dy_dt + 400 * y


# Initial condition: y(t=0) = 1
ic1 = dde.icbc.IC(geom, lambda t: 1, lambda _, on_initial: on_initial)


# Initial condition: dy/dt(t=0) = 0
def boundary_l(t, on_initial):
    # Select only the left end of the time domain
    return on_initial and np.isclose(t[0], 0)


def bc_func2(inputs, outputs, X):
    return dde.grad.jacobian(outputs, inputs, i=0, j=None)


ic2 = dde.icbc.OperatorBC(geom, bc_func2, boundary_l)

# Observations (computed from the reference solution).
# time2evaluate and observed_values are arrays of shape (N, 1) prepared beforehand.
observed_bc = dde.icbc.boundary_conditions.PointSetBC(time2evaluate, observed_values)
```
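The snippet above relies on `time2evaluate` and `observed_values`, which are computed from a reference solution that is not shown. One possible way to produce such arrays is sketched below, assuming a fixed-step fourth-order Runge-Kutta integration as the reference (the post does not state which scheme was used). The helper names `oscillator_rhs` and `rk4_reference`, the step count and the choice of 20 observations up to t = 0.5 are all hypothetical.

```python
import numpy as np


def oscillator_rhs(state):
    """First-order form of y'' + 4 y' + 400 y = 0 with state = (y, dy/dt)."""
    y, v = state
    return np.array([v, -4.0 * v - 400.0 * y])


def rk4_reference(t_end=1.25, n_steps=5000):
    """Integrate from y(0) = 1, dy/dt(0) = 0 with fixed-step RK4."""
    t = np.linspace(0.0, t_end, n_steps + 1)
    dt = t[1] - t[0]
    states = np.empty((n_steps + 1, 2))
    states[0] = (1.0, 0.0)
    for i in range(n_steps):
        s = states[i]
        k1 = oscillator_rhs(s)
        k2 = oscillator_rhs(s + 0.5 * dt * k1)
        k3 = oscillator_rhs(s + 0.5 * dt * k2)
        k4 = oscillator_rhs(s + dt * k3)
        states[i + 1] = s + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
    return t, states[:, 0]


t_ref, y_ref = rk4_reference()

# Hypothetical choice: keep 20 observations from the first part of the domain only
obs_idx = np.linspace(0, 2000, 20).astype(int)
time2evaluate = t_ref[obs_idx].reshape(-1, 1)    # column vector, shape (20, 1)
observed_values = y_ref[obs_idx].reshape(-1, 1)  # column vector, shape (20, 1)
```

Restricting the observations to the early part of the time domain leaves the remainder to be constrained by the ODE residual alone, which is the situation where a physics informed loss is expected to help.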

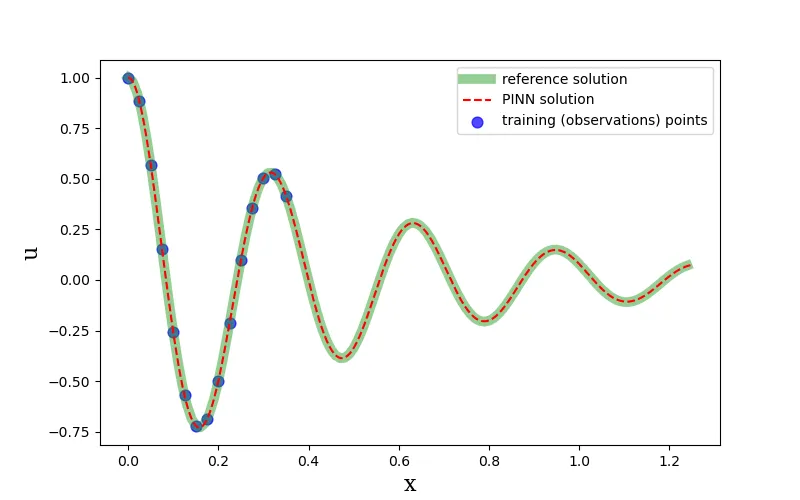

After training the PINN, we find the following result, which is quite satisfactory even outside the region where observational points are available.

At PropheSea, we believe that by combining software with mathematics, we can create a positive impact. Our applications are implemented across a broad range of industries and help our clients improve their processes.

Physics Informed Neural Networks are an appealing methodology for tackling problems that lack an abundance of data but for which prior knowledge is available that can be integrated into the solution. At PropheSea, this methodology is adopted, for instance, for digital twins of thermal industrial assets. An illustrative example of this approach is provided here.

Other common approaches that combine mathematical modelling with machine learning technology include surrogate modelling. An illustrative industry example of how PropheSea combined process-based models with machine learning technology using this methodology is provided here.